AI-Driven Real-Time Sign Language Translation App

HandsTalk

HandsTalk is a mobile app that combines computer vision with Generative AI (Large Language Model) to translate sign language into text in real time. It works with the device's built-in camera and needs no specialized hardware, which makes it accessible to a wider audience.

Current Progress

We are collaborating with HKSTP and HKUST as a startup (InnovateAI Ltd.). HandsTalk began as a university student project focused on American Sign Language translation.

We have now paused work on American Sign Language translation to concentrate on Cantonese Sign Language translation, especially for medical applications.

Our aim is to create a real-world solution. In the future, we plan to explore other themes and aspects of Cantonese Sign Language translation.

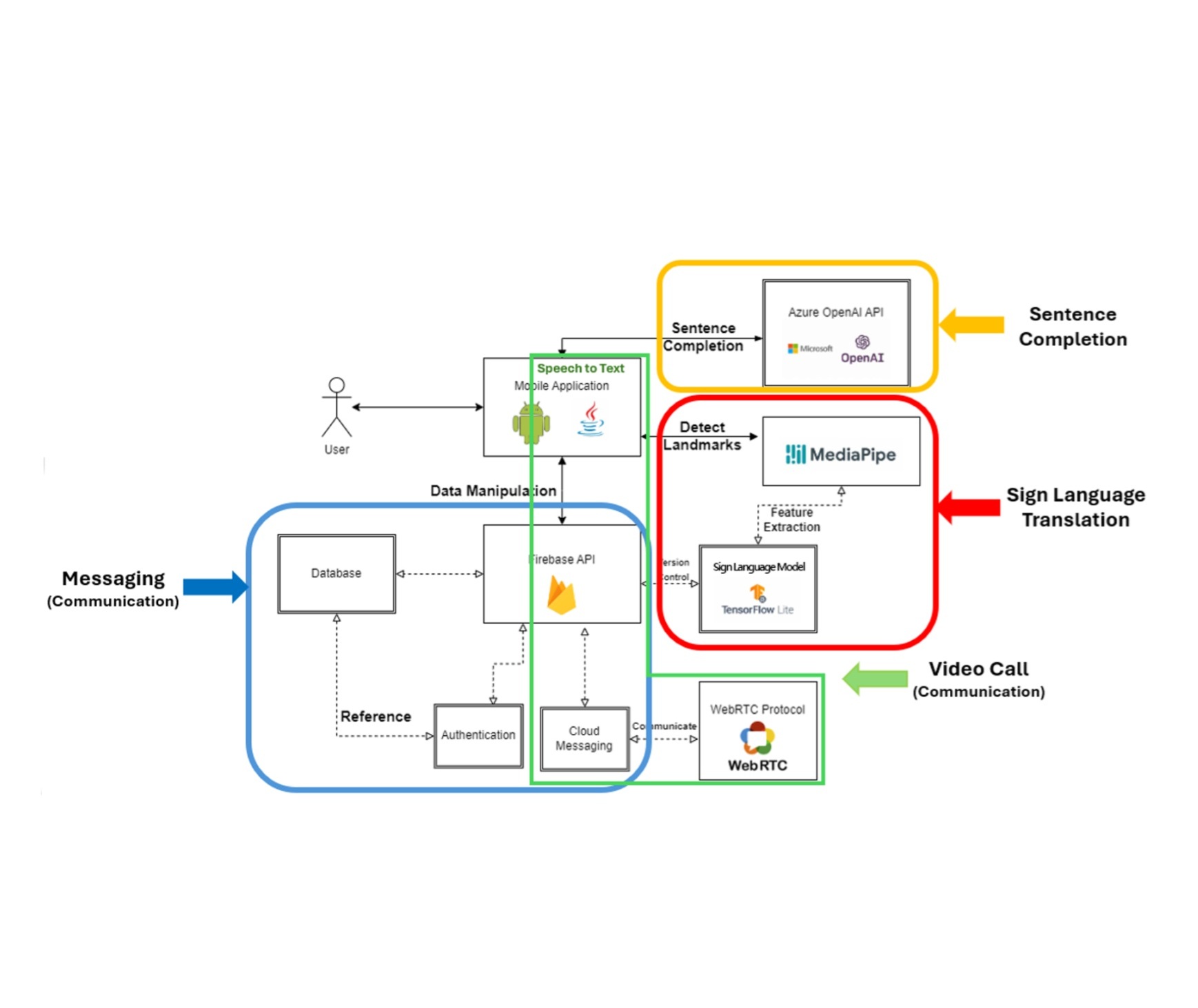

System Architecture

HandsTalk is composed of four main components that work together to facilitate effective communication:

- Seamless User Interface

- Real-time Body Posture Detection

- Sign Language Deep Learning

- AI-Driven Sentence Completion
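As a rough illustration of how the last three components could hand data to one another, here is a minimal Python sketch. The function name `translate`, the three stage callables, and the rule that collapses consecutive repeats of the same word are all assumptions made for illustration; they are not taken from the HandsTalk codebase.

```python
from typing import Callable, Iterable, List, Optional, Sequence

Landmarks = Sequence[float]  # hypothetical flat list of body/hand keypoint values


def translate(
    frames: Iterable[bytes],
    detect_posture: Callable[[bytes], Optional[Landmarks]],
    classify_sign: Callable[[Landmarks], Optional[str]],
    complete_sentence: Callable[[List[str]], str],
) -> str:
    """Run frames through posture detection, sign classification, and sentence completion."""
    words: List[str] = []
    for frame in frames:
        landmarks = detect_posture(frame)
        if landmarks is None:
            continue  # no body or hands found in this frame
        word = classify_sign(landmarks)
        # Assumption: a sign held over several frames should be counted once.
        if word is not None and (not words or words[-1] != word):
            words.append(word)
    return complete_sentence(words)


# Stand-in stages so the sketch runs; real stages would wrap a pose model,
# a trained classifier, and a large language model.
result = translate(
    frames=[b"a", b"a", b"b"],
    detect_posture=lambda frame: [float(b) for b in frame],
    classify_sign=lambda lm: {97.0: "i", 98.0: "pain"}.get(lm[0]),
    complete_sentence=lambda words: " ".join(words).capitalize() + ".",
)
```

Passing the stages in as callables keeps the sketch independent of any particular model or library.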

Sign Language Translation (Sentences)

HandsTalk does more than translate individual signs into words: it uses Generative AI (Large Language Model) to turn the identified words into coherent sentences, so that the output reflects the grammar and structure of sign language rather than a word-by-word rendering.

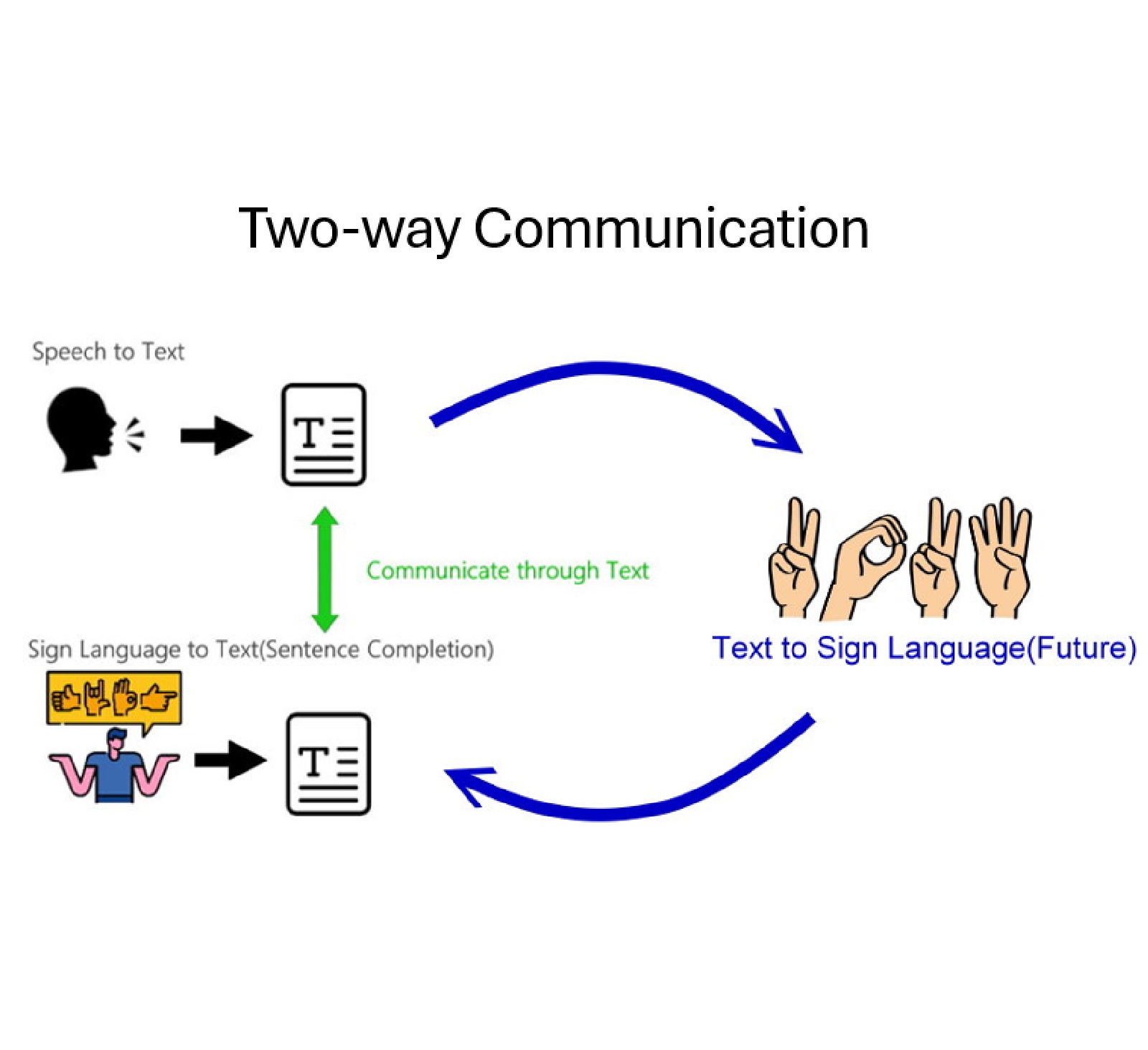
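The step from identified words to a sentence can be pictured as building a prompt for a large language model. The sketch below only constructs the prompt text; the function name `build_sentence_prompt`, the prompt wording, and the English target language are assumptions for illustration, and no particular LLM service is implied.

```python
from typing import List


def build_sentence_prompt(words: List[str]) -> str:
    """Build an LLM prompt asking for one sentence from recognized sign words."""
    cleaned = [w.strip().upper() for w in words if w.strip()]
    if not cleaned:
        raise ValueError("at least one recognized word is required")
    word_line = " ".join(cleaned)
    return (
        "The following words were recognized from sign language, in signing order:\n"
        f"{word_line}\n"
        "Rewrite them as one grammatical sentence without adding new information."
    )


# Made-up recognized words, purely for illustration.
prompt = build_sentence_prompt(["i", "pain", "head"])
```

The returned string would then be sent to whichever language model the app uses, and the model's reply shown as the translated sentence.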

Two-way Communication

HandsTalk aims to enable both signers and non-signers to communicate effectively, allowing each to use their own methods of communication. Non-signers can communicate without needing to learn sign language.

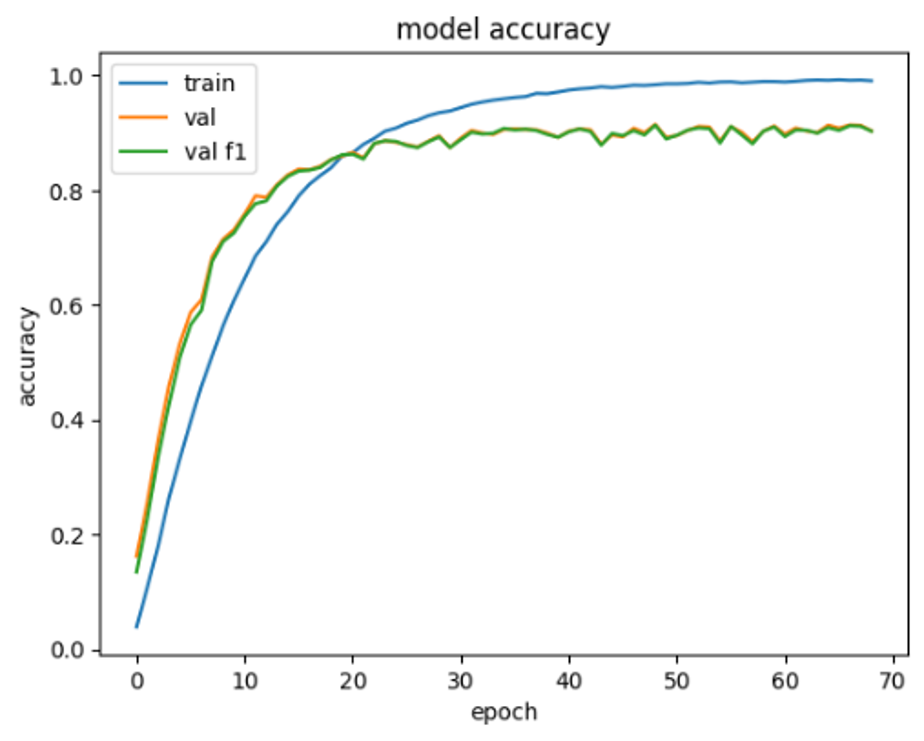

Translation AI Model

We trained the model on a dataset of 100 words and phrases, including the 26 letters of the alphabet. The trained model reaches an accuracy of 91.8%.

In real-world scenarios, the model also recognizes a significant portion of the trained words, phrases, and letters.

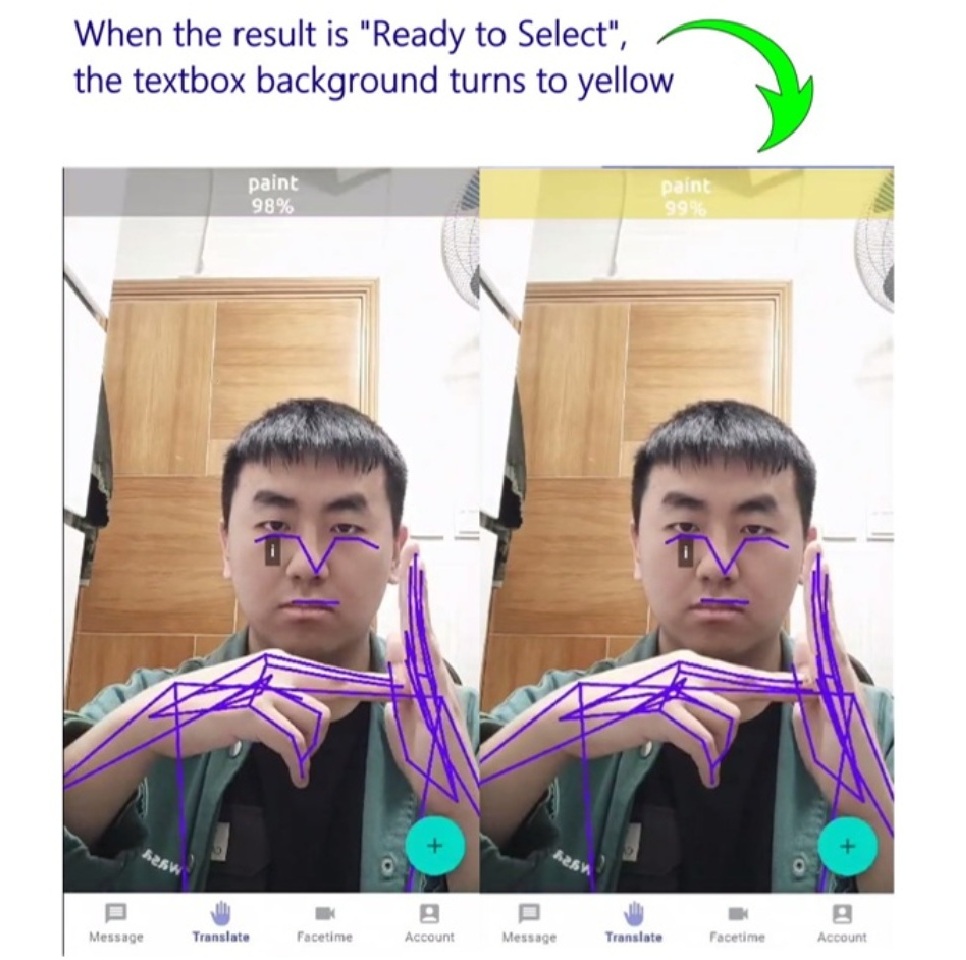
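For readers unfamiliar with the metric, an accuracy figure such as the one above is usually the share of samples whose predicted label matches the true label. The sketch below assumes plain top-1 accuracy; how the 91.8% figure was actually measured is not stated here, and the labels in the example are made up.

```python
from typing import Sequence


def top1_accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Return the fraction of samples whose predicted label equals the true label."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not actual:
        raise ValueError("at least one sample is required")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Made-up labels purely for illustration; not HandsTalk data.
actual = ["hello", "thanks", "a", "b", "doctor"]
predicted = ["hello", "thanks", "a", "d", "doctor"]
accuracy = top1_accuracy(predicted, actual)  # 4 of 5 labels match
```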

“Select” by users

When the app identifies a word or phrase from the user's signing, the result appears on the screen and the upper background turns yellow, indicating that the translated result is “Ready to Select.”

When both of the user’s hands move out of the camera frame, the “select” action is triggered and the displayed result is confirmed. This confirmation step helps ensure the translation is correct.
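The “Ready to Select” behavior can be modeled as a small per-frame state update. This is a minimal sketch under assumptions: the class name `SelectState`, its fields, and the `hands_visible` count are hypothetical and only illustrate the described flow.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SelectState:
    """Hypothetical state holder for the "Ready to Select" flow."""

    pending: Optional[str] = None  # result currently shown as "Ready to Select"
    selected: List[str] = field(default_factory=list)  # confirmed results, in order

    def update(self, recognized: Optional[str], hands_visible: int) -> Optional[str]:
        """Process one camera frame; return the result selected on this frame, if any."""
        if hands_visible > 0:
            # A hand is still in frame: keep showing the latest recognized result.
            if recognized is not None:
                self.pending = recognized
            return None
        # Both hands are out of frame: trigger "select" on the pending result.
        if self.pending is None:
            return None
        word, self.pending = self.pending, None
        self.selected.append(word)
        return word


state = SelectState()
state.update("doctor", hands_visible=2)       # result is now "Ready to Select"
chosen = state.update(None, hands_visible=0)  # hands leave the frame
```

Clearing `pending` after a selection means that further frames without hands do not select the same result twice.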

Real-Time AI Translation

HandsTalk employs advanced computer vision technology to offer precise real-time translation from sign language to text. Generative AI (Large Language Model) is utilized to refine the detected sign language into coherent sentences.

Seamless Platform

We provide a seamless platform for sign language learners to improve their skills and for sign language users to communicate, with a user-friendly interface.

Data-driven Insights

The sign language translation model is trained using deep learning.

Gratitude to WLASL for Their Invaluable Resource

Our American Sign Language translation prototype was trained in part on the WLASL dataset, the largest video dataset for Word-Level American Sign Language (ASL) recognition. We would like to express our gratitude to the creators of WLASL for making this resource available.